Cloud Native Codex CLI Quick Start Guide

Welcome to Cloud Native Codex CLI! This guide shows how to install the official Codex CLI, an AI programming assistant, and configure it to use the GPTMeta Pro API subscription service.

System Requirements

| Requirement | Details |
|---|---|
| Operating System | macOS 12+, Ubuntu 20.04+/Debian 10+, or Windows 11 via WSL2 |
| Git (optional, recommended) | 2.23+ for the built-in PR assistant |
| Memory | Minimum 4 GB (8 GB recommended) |

1. Install Codex CLI

Choose one method:

npm (universal)

npm i -g @openai/codex

# Or, if the native package name is needed:

# npm i -g @openai/codex@native

codex --version

Homebrew (macOS)

brew update

brew install codex

codex --version

If codex fails to run or your Node version is too old, upgrade Node (Node 22+ is commonly needed) or install via Homebrew instead.
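The Node requirement above can be checked before installing. The following is a minimal sketch, not part of Codex itself: the helper names `node_major` and `check_node` are hypothetical, and the threshold of 22 is taken from this guide's "commonly need Node 22+" remark rather than from an official minimum.

```shell
# Threshold taken from this guide; treat it as a guideline, not an official minimum.
REQUIRED_NODE_MAJOR=22

# Extract the major version from a string such as "v22.3.0" or "18.19.1".
node_major() {
  v=${1#v}
  printf '%s\n' "${v%%.*}"
}

# Report whether the installed Node (if any) meets the threshold.
check_node() {
  if ! command -v node >/dev/null 2>&1; then
    echo "node not found: install Node or use Homebrew"
    return 1
  fi
  major=$(node_major "$(node --version)")
  if [ "$major" -ge "$REQUIRED_NODE_MAJOR" ]; then
    echo "Node $major is new enough"
  else
    echo "Node $major is older than $REQUIRED_NODE_MAJOR: upgrade Node or use Homebrew"
    return 1
  fi
}
```

Calling `check_node` before `npm i -g @openai/codex` shows up front whether the npm route is likely to work.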

2. Prepare GPTMeta Pro API Key and Configure Codex

Register GPTMeta Pro API Account

Step 1: Visit Registration Page

- Visit https://coultra.blueshirtmap.com

- Click the registration button to create a new account

- Fill in the necessary registration information

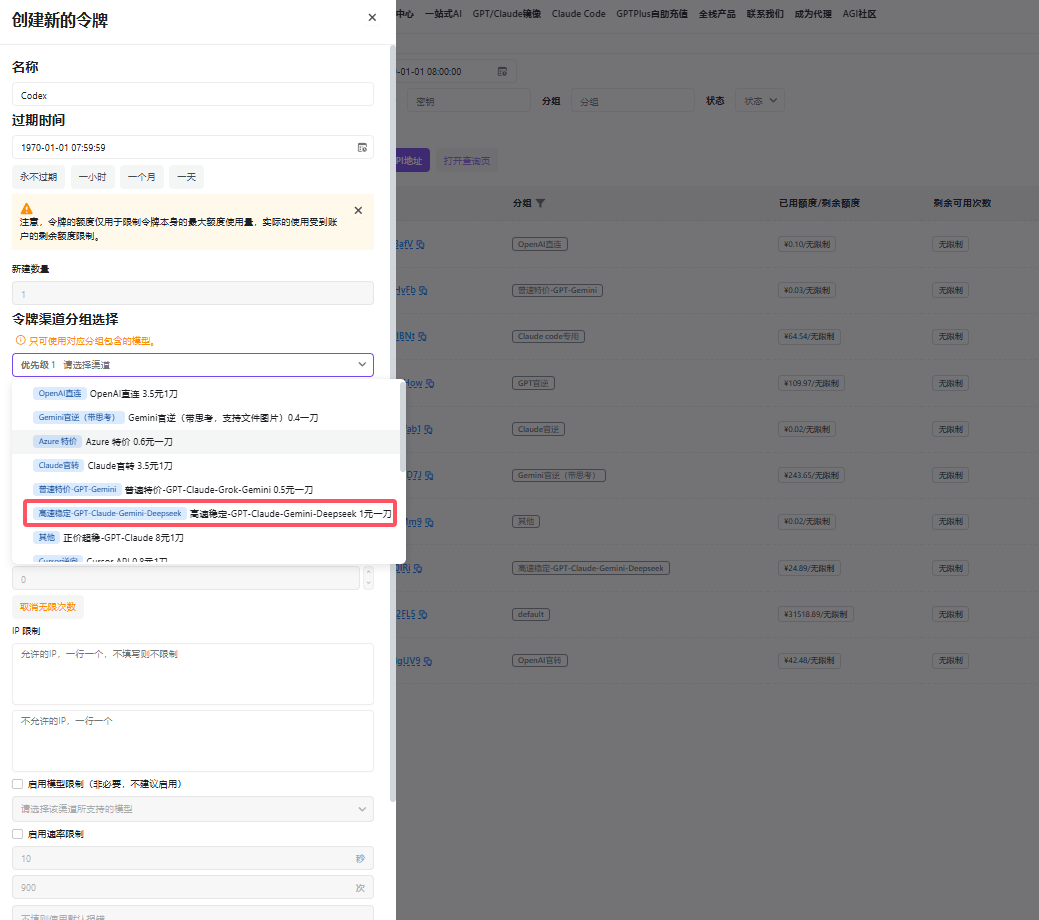

Step 2: Create API Key

- After successful login, go to the API key management page

- Create a new API key group

- Select "High-speed Stable Channel" as the group name

- Generate and copy your API key


Configure Codex

Codex reads config.toml from ~/.codex/ at startup. Create one if it does not exist:

mkdir -p ~/.codex

nano ~/.codex/config.toml

Add the following to config.toml (modify as needed):

# Top-level defaults

model = "gpt-4o" # Fill in per actual models available on GPTMeta Pro API

model_provider = "coultra" # Set default provider

[model_providers.coultra]

name = "GPTMeta Pro API (OpenAI-compatible)"

base_url = "https://coultra.blueshirtmap.com/v1"

env_key = "COULTRA_API_KEY" # Name of the environment variable that holds your API key (not the key itself)

wire_api = "chat" # Use OpenAI Chat Completions wire protocol

# Optional: define a profile for quick CLI switching

[profiles.coultra]

model_provider = "coultra"

model = "gpt-4o"

approval_policy = "on-request" # Ask when needed

sandbox_mode = "workspace-write" # Allow writing in current workspace; still offline

Key field notes:

- model / model_provider: the default model and provider.

- [model_providers.<id>].base_url: the /v1 root of your service; Codex talks to it over the Chat Completions wire protocol (typically POST {base_url}/chat/completions).

- env_key: tells Codex which environment variable to read credentials from.

- wire_api: the wire protocol type; use "chat" for Chat Completions compatibility.

- profiles.* and --profile: bundle a set of config values for quick switching at run time.
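To make the base_url and wire_api notes concrete, the sketch below builds the Chat Completions URL from a base_url and sends one minimal request with curl. It is an illustration under assumptions: the helper names are hypothetical, and Bearer-token authentication and the request body follow the usual OpenAI-compatible convention, which this guide does not confirm for GPTMeta Pro API.

```shell
# Join a provider base_url with the Chat Completions path, tolerating one trailing slash.
chat_completions_url() {
  base=${1%/}
  printf '%s/chat/completions\n' "$base"
}

# Send one minimal Chat Completions request (defined here, not called automatically).
# Arguments: base_url, model. Reads the key from COULTRA_API_KEY.
# Assumption: the provider accepts "Authorization: Bearer <key>".
probe_provider() {
  if [ -z "${COULTRA_API_KEY:-}" ]; then
    echo "COULTRA_API_KEY is not set" >&2
    return 1
  fi
  body=$(printf '{"model": "%s", "messages": [{"role": "user", "content": "ping"}]}' "$2")
  curl -sS "$(chat_completions_url "$1")" \
    -H "Authorization: Bearer $COULTRA_API_KEY" \
    -H "Content-Type: application/json" \
    -d "$body"
}
```

For example, `probe_provider "https://coultra.blueshirtmap.com/v1" "gpt-4o"` should return a JSON response if the key, base_url, and model name are all valid.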

3. Run and verify

Make sure COULTRA_API_KEY is set in the current terminal session (for example, export COULTRA_API_KEY="your-key"), then:

# Run with profile

codex --profile coultra "Explain the current repository structure in English"

# Or run with defaults (provider already set to coultra)

codex "Generate a Python script to fetch data from an API and save it as CSV"

During execution, Codex reads code, modifies files, and runs commands in a local sandbox. When it needs permission, it prompts according to approval_policy; workspace-write allows writes only within the project directory and keeps the sandbox offline.
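Before the first run, it can help to confirm the two prerequisites this guide relies on: the environment variable and the config file. The function below is a small sketch; `codex_preflight` is a hypothetical helper name, not a Codex command.

```shell
# Check that the API key variable is set and that the config file exists.
# Prints one line per problem and returns non-zero if anything is missing.
codex_preflight() {
  config="${HOME}/.codex/config.toml"
  status=0
  if [ -z "${COULTRA_API_KEY:-}" ]; then
    echo "COULTRA_API_KEY is not set in this shell"
    status=1
  fi
  if [ ! -f "$config" ]; then
    echo "missing $config"
    status=1
  fi
  return "$status"
}
```

A typical use is `codex_preflight && codex --profile coultra "..."`, so that Codex only starts when both checks pass.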

4. Sandbox / Approval policy quick reference

Approval policy:

- Use --ask-for-approval on the command line, or set approval_policy in the config, to control how often Codex asks for confirmation.

- --full-auto is a convenience flag (fewer interruptions, still sandboxed).

Sandbox levels:

- read-only: no writes, offline

- workspace-write: can write within the project directory, still offline

- danger-full-access: not recommended; equivalent to disabling the sandbox

- The CLI uses --sandbox MODE; the config uses sandbox_mode = "MODE".

Need temporary network access? The current version emphasizes "offline-by-default" safety. Use danger-full-access, or any future network options, with caution.
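The sandbox modes listed above can be guarded with a thin wrapper so that a typo in the mode name fails early instead of reaching Codex. This is a sketch only: `is_sandbox_mode` and `codex_sandboxed` are hypothetical helper names, and the wrapper relies solely on the --sandbox MODE flag described in this section.

```shell
# Accept only the three sandbox modes named in this guide.
is_sandbox_mode() {
  case "$1" in
    read-only|workspace-write|danger-full-access) return 0 ;;
    *) return 1 ;;
  esac
}

# Run Codex with an explicit sandbox mode; remaining arguments are passed through.
codex_sandboxed() {
  mode=$1
  shift
  if ! is_sandbox_mode "$mode"; then
    echo "unknown sandbox mode: $mode" >&2
    return 2
  fi
  codex --sandbox "$mode" "$@"
}
```

For instance, `codex_sandboxed read-only "Explain the current repository structure"` runs a prompt without allowing any writes.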

5. FAQ

① 401/403: API Key invalid or insufficient balance

- Regenerate a key in the GPTMeta Pro API dashboard, and make sure COULTRA_API_KEY is present in the current session (echo $COULTRA_API_KEY).

- In CI, inject environment variables via CI Secrets to avoid hardcoding.
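Echoing the key prints the whole secret to the terminal and possibly to logs. As an alternative, the sketch below shows only the last four characters and the length; `mask_key` is a hypothetical helper, not part of Codex.

```shell
# Show whether a key is set without printing the whole secret.
# Prints "(unset)" and returns 1 for an empty value.
mask_key() {
  key=$1
  if [ -z "$key" ]; then
    echo "(unset)"
    return 1
  fi
  len=${#key}
  if [ "$len" -le 4 ]; then
    echo "****"
    return 0
  fi
  # Keep only the last four characters of the key.
  tail=$(printf '%s' "$key" | cut -c "$((len - 3))-")
  printf '****%s (%s chars)\n' "$tail" "$len"
}
```

Running `mask_key "$COULTRA_API_KEY"` confirms the variable is present in the session and lets you compare its ending with the key shown in the dashboard.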

② 404 / "Resource not found": incorrect base_url

- Most compatible implementations require base_url to point to …/v1; some platforms (e.g., Azure) need additional path segments. An incomplete path causes a 404.
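A quick way to narrow down a 404 is to check the shape of base_url and then look at the raw HTTP status. The sketch below assumes the provider exposes the OpenAI-style GET {base_url}/models endpoint and Bearer authentication; neither is confirmed by this guide, and both helper names are hypothetical.

```shell
# Return success when the base_url ends in /v1 (one trailing slash is tolerated).
ends_with_v1() {
  case "${1%/}" in
    */v1) return 0 ;;
    *) return 1 ;;
  esac
}

# Print only the HTTP status code for GET {base_url}/models (assumed endpoint).
# Defined here, not called automatically, because it needs network access.
http_status() {
  curl -s -o /dev/null -w '%{http_code}\n' \
    -H "Authorization: Bearer ${COULTRA_API_KEY:-}" \
    "${1%/}/models"
}
```

If `ends_with_v1` succeeds but `http_status` still prints 404, the provider may need additional path segments; a 401 or 403 instead points back to the key.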

③ --profile not applied or some keys missing

- Upgrade to a newer version; older versions had known issues loading some profile keys.

④ After npm install, codex not available or wrong version

- Upgrade Node (recommend 22+), or install via Homebrew. Recent versions changed npm packaging and behavior.

⑤ Local/third-party provider unreachable or wrong port

- Confirm base_url; early versions had bugs around base_url/port handling that can be resolved by upgrading.

6. A minimal viable configuration (MVP) you can reuse

# ~/.codex/config.toml

model = "gpt-4o"

model_provider = "coultra"

[model_providers.coultra]

name = "GPTMeta Pro API (OpenAI-compatible)"

base_url = "https://coultra.blueshirtmap.com/v1"

env_key = "COULTRA_API_KEY" # Name of the environment variable that holds your API key (not the key itself)

wire_api = "chat"

[profiles.coultra]

model_provider = "coultra"

model = "gpt-4o"

approval_policy = "on-request"

sandbox_mode = "workspace-write"

Start command:

export COULTRA_API_KEY="your-key"

codex --profile coultra "Add a CLI subcommand to this repository"

7. Advanced Usage Tips

CLI Reference

| Command | Purpose | Example |
|---|---|---|
| codex | Interactive TUI | codex |
| codex "..." | Interactive TUI with initial prompt | codex "fix lint errors" |
| codex exec "..." | Non-interactive "automation mode" | codex exec "explain utils.ts" |

Non-interactive/CI Mode

Run Codex headlessly in pipelines. Example GitHub Action step:

- name: Update changelog via Codex
  run: |
    npm install -g @openai/codex
    export OPENAI_API_KEY="${{ secrets.OPENAI_KEY }}"
    codex exec --full-auto "update CHANGELOG for next release"

Model Context Protocol (MCP)

Codex CLI can use MCP servers defined in an mcp_servers section of ~/.codex/config.toml. The section mirrors how tools like Claude and Cursor define mcpServers in their JSON configuration files, but the format differs slightly because Codex uses TOML rather than JSON. For example:

# IMPORTANT: the top-level key is `mcp_servers` rather than `mcpServers`.

[mcp_servers.server-name]

command = "npx"

args = ["-y", "mcp-server"]

env = { "API_KEY" = "value" }
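Because the key name differs from the JSON convention, a config copied from another tool can carry the wrong spelling. The following sketch checks a config file for either spelling with grep; `check_mcp_key` is a hypothetical helper, and it only inspects TOML table headers at the start of a line.

```shell
# Report which MCP key spelling a config file uses.
# Returns 1 only when the JSON-style mcpServers spelling is found.
check_mcp_key() {
  file=$1
  if grep -q '^\[mcpServers' "$file"; then
    echo "found mcpServers: rename to mcp_servers"
    return 1
  fi
  if grep -q '^\[mcp_servers\.' "$file"; then
    echo "mcp_servers section found"
    return 0
  fi
  echo "no MCP servers configured"
}
```

Run it as `check_mcp_key ~/.codex/config.toml` after editing the file.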